(These are some unfinished notes I’m not sure I’m ever going to finish, because ultimately chatGPT was not the thing I thought it was going to be. There’s lots to think about with chatGPT, but this isn’t it.)

There is a buzz around OpenAI’s chatGPT, a chatbot that can tell you facts about whatever you want, and tell you those facts in the style of human conversation. Within the education space, much of this buzz has taken the form of sheer panic. The potential horrors of students using this tool to complete homework are giving academics sleepless nights, and numerous tools have quickly popped up to check that students are not using chatGPT to cheat.

But what about the students’ thoughts on this tool? Are lazy money-grabbing tutors taking 9 grand a year from them and using chatGPT to generate their courses? Can students use similar tools that check if their materials have been created using chatGPT? Can I be one of these lazy money-grabbing tutors? I set off on an adventure to see if I could write a tool to automatically create courses from AI, to have a little think about some of the issues at hand.

At first, I wanted to write some code that would take any subject matter I gave it, and then use the chatGPT API to generate complete courses on that subject automatically. I was hoping the AI could take my job and I could have a 7-day weekend, but it turns out that chatGPT is still in a beta-like state, and doesn’t have an API yet. So, instead, I decided to do the same kind of thing manually, still using chatGPT, but creating a course by copying and pasting from facts generated by chatGPT instead of automating the process. I would be settling for a 6-day weekend instead.

Like most new things I play with nowadays, I decided to stream the process live, so the creation of the course was done with a small community on Twitch. This meant other interested people could join in and chat about what the AI was doing. (On a side note, if you are an educator who doesn’t stream the interesting things you do, why not? Start now before the AI really does take your job!)

A course about castles

My fellow band of chatGPT pokers, ‘The Twitch Chat’, decided the subject matter for the course would be castles, and we would see if we could create a fully online course about them using chatGPT. We were going to become tutors in castles, without knowing a thing about castles. Automate the process down the line. Become millionaires.

There was a set of rules, devised by the Twitch channel chat and tweaked as we went along. These were:

- The course content, questions and images were to be generated by AI tools, with chatGPT creating most of the content

- If chatGPT was unable to do what we requested, because of its limitations or because the site was overloaded, we could revert to a locally running version of GPT-2

- While chatGPT was in beta, we were allowed to get a trial copy of the e-learning package creation software ‘Storyline’ and paste content from an AI tool into the tool

We didn’t ‘polish’ the final product and you are welcome to see what we ended up making in an hour or so by clicking here. However, it was the conversations and process that ended up holding the most value, and the course itself ended up being pretty... poor. Anyway, here are some notes about our adventure.

Generating facts using chatGPT

We asked chatGPT for an array of introductions for the course so that we could pick one. We settled for the one it gave us when we asked for “an edgy introduction to a course about castles”, then proceeded to ask chatGPT for some facts about castles that we could simply copy and paste into our online course.

We started by asking chatGPT for some information and facts about castle building regulations. It gave us some facts about castle building regs, along with some nice intro and outro text. To go along with these facts, we needed some pictures of castles. I didn’t have any, so we used DALL-E, OpenAI’s image generation service, to create some, and pasted them in.

We went through the same process for moats, and pasted the moat facts straight into the course. At this point we were pretty bored. We had discovered that you probably could do this automatically with an API, but decided that, actually, this was something we could have done quite easily using Wikipedia or any good book about castles. So, we decided to mix it up a bit, and asked chatGPT to give us some ‘false information’ about castles.

ChatGPT won’t lie

At first, we wanted to generate some false information so we could use it for assessment in true or false questions. But thinking it through, we talked about how, when we teach, it isn’t always about facts. To learn anything, you learn about the world first, and why the subject matter is important to us in the world we live in. We incorporate this into our teaching through humour, half-truths and the process of getting to know our audience. We looked for some funny half-truths or lies to use in our course and found that chatGPT does not like to lie. We were told off.

I found this quite interesting. Knowing that it won’t lie, we couldn’t help but wonder if perhaps there were instances in which students might prefer a course made automatically by chatGPT. Or maybe courses with a “facts provided by chatGPT” badge, to show that pesky academics weren’t filling student heads with out-of-date knowledge. Anyway, chatGPT’s refusal to tell anything but the truth wasn’t a problem, as I run an instance of GPT-2, an older relative of the AI model that chatGPT is based on, on my own computer. In fact, it is very easy to get GPT-2 running on your machine to tinker with, and doing so means you are no longer required to use OpenAI’s cloud services. As such, I tinker with GPT-2 quite often, and I have learnt that when I poke at it I’m not looking for truth. I’m looking to have a conversation with a statistical model of the world, partly trying to understand the data it is trained on, and partly just being playful. In contrast, chatGPT is only interested in giving you the facts, with a bit of fancy chat to accompany them. It doesn’t like to joke around. Using the two separate AI models was like playing different games with different rules.

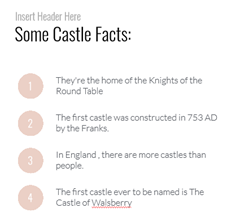

So, I reverted to GPT-2 to generate some fake facts, and as I do, my mindset while using the AI tools also changes. We are no longer interested in the truth, but interested in the conversations that will occur as we discuss the output. I start to tweak some of the variables that hosting a local version of GPT-2 lets you tweak, never really certain what they do, but knowing that changing them gets interesting results. I like GPT-2. Changing variables is like pulling random levers and turning random dials in the control room of the Death Star. I’m not sure what will happen, but it’ll be interesting. It’s a different experience to using chatGPT and raises questions about what AI is for. Is it for understanding the world, recovering facts, making decisions, being playful? Whatever it’s for, we did manage to create some interesting facts about castles.
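For anyone curious what those levers might be: the notes don’t say which variables were changed, but two dials that local GPT-2 setups commonly expose are sampling temperature and top-k. The sketch below is only an illustration of what such dials do to a next-word choice, not the code used on the stream. The function names and the toy scores are invented for the example.

```python
import math
import random


def next_word_probs(scores, temperature=1.0, top_k=None):
    """Turn raw word scores into sampling probabilities.

    scores: dict of word -> raw score (toy values, not real model output).
    temperature: below 1 sharpens the distribution, above 1 flattens it.
    top_k: if set, only the k highest-scoring words stay in play.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    scaled = [(word, score / temperature) for word, score in ranked]
    biggest = max(score for _, score in scaled)
    # Subtract the largest score before exponentiating for numerical stability.
    weights = [(word, math.exp(score - biggest)) for word, score in scaled]
    total = sum(weight for _, weight in weights)
    return {word: weight / total for word, weight in weights}


def sample_word(scores, temperature=1.0, top_k=None, rng=random):
    """Draw one word at random according to the adjusted probabilities."""
    probs = next_word_probs(scores, temperature, top_k)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


# Invented scores for the word after "Castles were built to keep out ..."
toy_scores = {"invaders": 4.0, "rain": 2.0, "dragons": 1.0, "bananas": 0.0}

sensible = next_word_probs(toy_scores, temperature=0.5)
playful = next_word_probs(toy_scores, temperature=2.0)
```

With these toy numbers, a low temperature means “invaders” wins nearly every time, while a high temperature gives “bananas” a real chance of turning up, which is roughly the territory the funny facts come from.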

I think all these facts are false, but they are also funny, give us an insight into the data that was used for training, and prompted great conversations in our group around castles. We decided that this was out of scope for what we wanted the course to be (that is, mostly AI generated) and deleted them. Except for the 3rd one, which we thought was hilarious.

Trying to add some critical thinking to the course

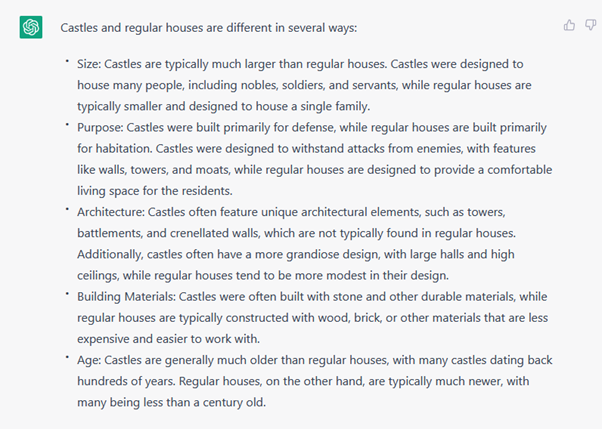

We returned to generating facts from chatGPT and realised that just copying and pasting facts from it was actually not much different to copying and pasting from other web-based resources. It made our life a little easier because all the information was there. But really, all we were gaining from chatGPT was some facts we could google, wrapped up in nice text. Feeling like our course was a bit shallow, we tried to get it to do some thinking about castles, and asked it to compare castles to regular houses.

We wondered what it was doing behind the scenes to compare the two. I guessed that each item in the AI’s model somehow has properties, and that it just writes about the properties of each item with a bit of added fluff. We tried again, comparing a castle to a banana, and it pretty much wrote the same thing.

Wrapping up

We added these comparisons to the course, added some assessment and called it quits. At this point, we were pretty bored with chatGPT’s predictable take on castles. It could get facts and sound human, which was pretty cool, but we had decided that it was nothing to be worried about, and probably not going to make us millionaires. Also, from a quick play, it seemed that, like the previous great mass panics of educational technology – the web, Encarta ’95, scientific calculators, the abacus – chatGPT probably won’t stop learners from learning.
