I wanted to see how easy it was to create a Twitch bot that generates text using GPT-2, hosted locally and provided by Hugging Face transformers. The idea is that the bot responds to Twitch chat commands. It turned out to be very easy. Here is a simple guide for future me to follow when I get stuck again, but it might also be useful to other people tinkering with this stuff. There are some things I am not 100% clear on, which are highlighted below, and comments putting me right would be helpful.

The things I had, which you may or may not need (see the notes):

- Anaconda (will need)

- Note: I used Anaconda because the PyTorch documentation seemed to recommend conda, and when I decided to ignore the PyTorch recommendations and use plain Python/pip, it didn’t play well. Unfortunately, twitchio and Hugging Face transformers seemed to be available only on PyPI, so I’ve been using a mix of ‘conda install’ and pip. I don’t think this is a problem. Let me know if it is.

- A CUDA graphics card (don’t think you need it!)

- Note: GPT-2 is pretrained, so I don’t think you need a CUDA graphics card just to generate text, but I’ve set up PyTorch to work with one anyway, and these notes assume you have too. Comment to let me know if you know whether a CUDA graphics card is needed for this to work.

- The CUDA toolkit

- Note: This is needed to set up CUDA to work with PyTorch, so if you have a CUDA graphics card and are following these instructions, you need to get this too.

Step 1: Set up Conda

If you haven’t already, install Anaconda. Once installed, create an environment to work in. Do this by opening Anaconda Prompt and using the following command:

    conda create --name twitch_bot

This will create a conda virtual environment for us to muck up!

Step 2: Start a project

Navigate to wherever you want to create your project on your local machine. Before we install dependencies, we are going to create a quick script that just checks if CUDA is installed properly. In your working directory, create a Python script (I called mine bot.py) and stick in the following:

    import torch

    # check if using cuda
    print(torch.cuda.is_available())

Step 3: Set up dependencies

In your Anaconda Prompt, activate your twitch_bot environment:

    conda activate twitch_bot

Head to https://pytorch.org/, fill in the details for the version of CUDA you want, and copy the conda command. Mine was:

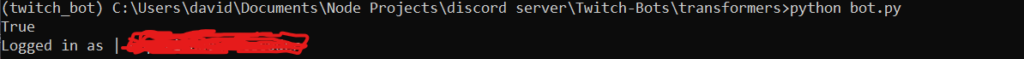

    conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch

Now in Anaconda Prompt, change the directory to the location of bot.py, and type “python bot.py”. The script should print “True” to the Anaconda Prompt. This means that PyTorch has checked whether CUDA is available and has said yes. If it prints “False”, then something has gone wrong: either you don’t have CUDA available, or it is not set up right. That said, it might not be a problem.
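Since a negative result from the CUDA check may not be fatal, one option is to let the script pick a device rather than assume a GPU. The sketch below is my own assumption, not part of the original setup: `pick_device` and `describe` are hypothetical helpers that turn the result of `torch.cuda.is_available()` into the integer `device` argument accepted by the transformers `pipeline` function (0 for the first GPU, -1 for the CPU).

```python
def pick_device(cuda_available: bool) -> int:
    """Return a transformers-style device index: 0 = first GPU, -1 = CPU."""
    return 0 if cuda_available else -1


def describe(device: int) -> str:
    """Human-readable label for the chosen device index."""
    return "CPU" if device < 0 else f"CUDA GPU {device}"


if __name__ == "__main__":
    try:
        import torch  # only needed for the real availability check
        cuda_ok = torch.cuda.is_available()
    except ImportError:
        cuda_ok = False  # PyTorch not installed: fall back to the CPU

    device = pick_device(cuda_ok)
    print(f"Text generation would run on: {describe(device)}")
    # Later, in the bot, the index could be passed along like this:
    # pipeline('text-generation', model='gpt2', device=device)
```

If this reports the CPU, generation should still work, just more slowly.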

Now we will install twitchio and transformers. Head back to your Anaconda Prompt and type:

    pip install twitchio
    pip install transformers

Step 4: Set up bot account

You need a Twitch account, so head over to https://www.twitch.tv and sign up; this will be the account of your bot. Next, head over to https://twitchtokengenerator.com/ (while signed in to Twitch) and click ‘bot chat token’ when it asks you. It should give you three keys. Copy the access token, create a file called config.py in the same folder as bot.py, and type this into it, replacing <TOKEN> with your actual token:

    TOKEN = "<TOKEN>"

Step 5: Write a basic bot class

The code below shows a basic bot that listens for two commands: !hello, which just says “Hello”, and !superhero, which generates superhero text based on the user’s name and sends it to the chat. For your bot, you will have to replace <CHANNEL_NAME> with the name of the channel that the bot will run on. The code is quite straightforward, and while I am planning on doing a short video explaining it, you will want the twitchio and Hugging Face documentation for now.
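One detail of the !superhero command in the bot listing is easier to see in isolation: the generated text can be longer than a single chat message, so the bot sends it in pieces of at most 450 characters. The sketch below pulls that step out into a standalone function. `split_message` is a hypothetical name, not part of twitchio, and 450 is simply the value used in the bot (as far as I know Twitch caps chat messages at around 500 characters, so this leaves some headroom).

```python
def split_message(text: str, limit: int = 450) -> list[str]:
    """Split text into consecutive chunks of at most `limit` characters."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    # Step through the text in strides of `limit`; the last chunk may be shorter.
    return [text[i:i + limit] for i in range(0, len(text), limit)]


if __name__ == "__main__":
    sample = "x" * 1000  # stand-in for GPT-2 output
    for part in split_message(sample):
        print(len(part))
```

This cuts wherever the limit falls, including mid-word; splitting on whitespace would be a friendlier variant.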

    import torch
    import config
    from twitchio.ext import commands
    from transformers import pipeline

    # check if using cuda
    print(torch.cuda.is_available())

    #generator = pipeline('text-generation', model = 'gpt2')
    # jsonresults = generator(f"Describe a superhero {username} [endprompt]", max_length = 150, num_return_sequences=3)
    #print((jsonresults[1]['generated_text']))

    class Bot(commands.Bot):
        #currentbot

        def __init__(self):
            # Initialise our Bot with our access token, prefix and a list of channels to join on boot...
            # prefix can be a callable, which returns a list of strings or a string...
            # initial_channels can also be a callable which returns a list of strings...
            super().__init__(token=config.TOKEN, prefix='!', initial_channels=['#<CHANNEL_NAME>'])

        async def event_ready(self):
            # Notify us when everything is ready!
            # We are logged in and ready to chat and use commands...
            print(f'Logged in as | {self.nick}')

        @commands.command()
        async def hello(self, ctx: commands.Context):
            await ctx.send(f'Hello {ctx.author.name}!')

        @commands.command()
        async def superhero(self, ctx: commands.Context):
            superherotext = self.gen_superhero(ctx.author.name)
            stringlen = 450
            strings = [superherotext[i:i+stringlen] for i in range(0, len(superherotext), stringlen)]
            for sts in strings:
                await ctx.send(sts)

        def gen_superhero(self, username):
            #write the code to generate a superhero
            generator = pipeline('text-generation', model='gpt2')
            jsonresults = generator(f"Describe a superhero called {username}: {username} is ", max_length=150, num_return_sequences=3)
            return jsonresults[1]['generated_text']

    bot = Bot()
    bot.run()

Step 6: Run the bot, connect and play

Go to your chat channel on Twitch by heading to twitch.tv/<username> and clicking chat. You can then connect the bot by going to the Anaconda Prompt and running your script again by typing “python bot.py”. Your prompt should say something like this:

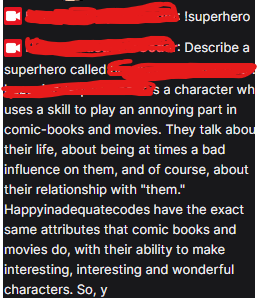

Now you can run your !superhero command by typing !superhero in the chat.

Easy!
